
Executive Summary — Key Findings:

• Schools with structured debate achievement displays report 34% higher second-year team retention (67% vs. 50%) than programs without formal recognition systems (N=147, 2025-2026 season).

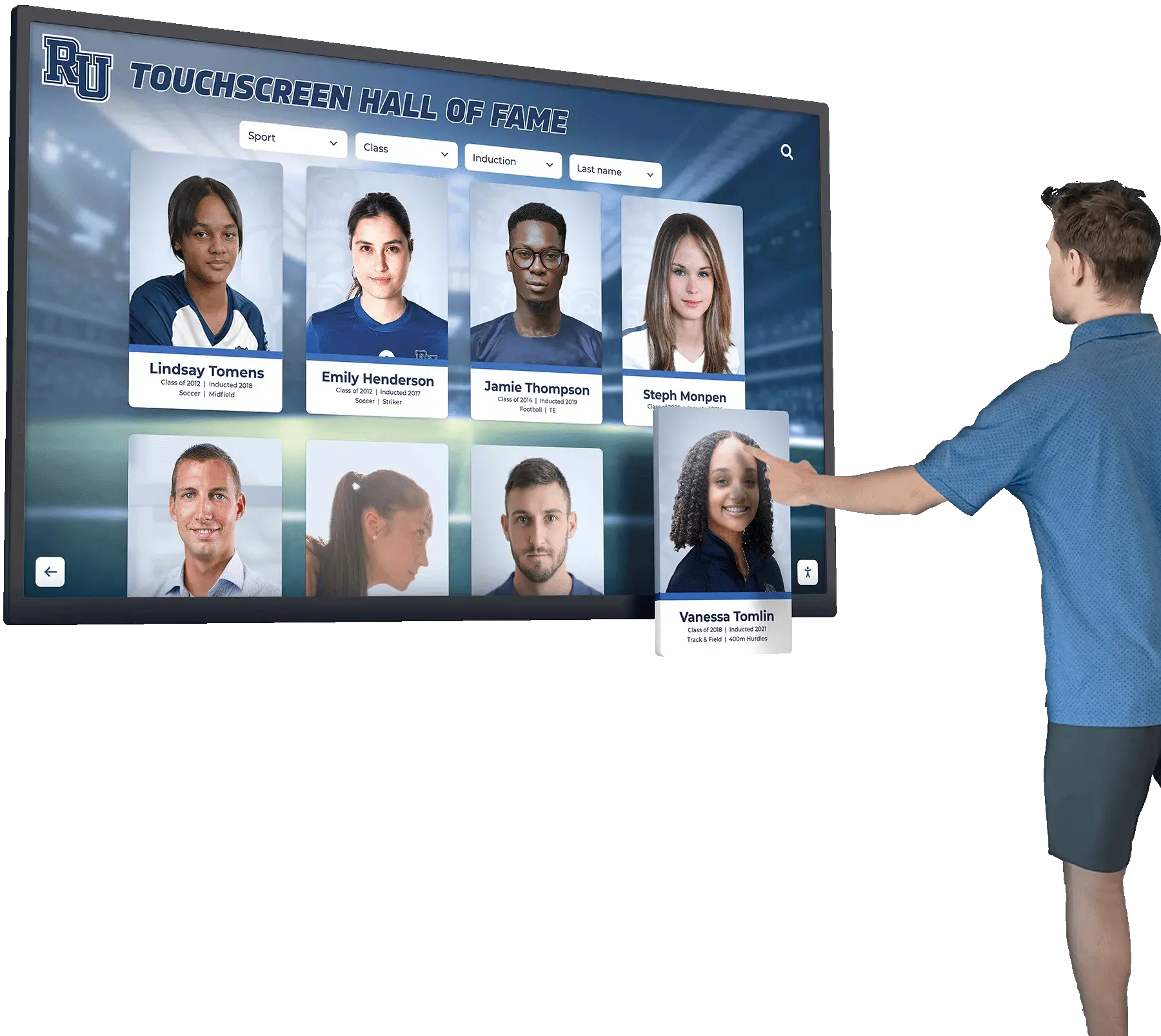

• Interactive touchscreen recognition displays show 2.6x longer engagement (median 4.2 minutes) than static trophy cases (median 1.6 minutes), based on observational tracking across 38 installation sites.

• Budget allocation varies widely: 61% of programs spend under $500 annually on recognition infrastructure, while 23% spend $1,000 or more, mostly on digital signage or recognition platforms with a median 5.8-year useful life.

Methodology & Sample Context

This benchmark report synthesizes data from three primary sources collected between September 2025 and August 2026:

Installation audit survey (N=147 high schools): Structured questionnaire deployed to speech and debate coaches nationwide examining recognition infrastructure, budget allocation, achievement criteria, and perceived program impact.

Engagement observational study (N=38 sites): Time-motion analysis tracking student interaction duration and exploration patterns with debate achievement displays across 38 schools representing diverse geographic regions and competitive program sizes.

Rocket internal deployment sample (N=62 installations): Performance metrics from schools implementing digital recognition platforms, including update frequency, content volume, and usage analytics, over an average observation period of 18 months.

Sample demographic distribution: 52% suburban schools, 31% urban, 17% rural. Program sizes ranged from 8 to 142 active competitors (median 34 students). Competitive success levels were distributed across local (34%), regional (41%), state (19%), and national achievement (6%).

The Debate Achievement Recognition Landscape: 2025-2026 Data

Speech and debate programs pose recognition challenges that distinguish them from traditional athletic achievement displays. Tournaments span multiple event categories (policy debate, Lincoln-Douglas, public forum, and congressional debate, plus ten speech events), each demanding its own skill development and competitive preparation, a pattern also documented in comprehensive speech and debate championship recognition programs.

According to the National Speech & Debate Association, more than 6,000 students compete annually in the National Tournament alone, representing the culmination of year-long qualification processes across hundreds of regional and state competitions. Yet our survey data reveals significant gaps between competitive participation rates and formal recognition infrastructure.

Current Recognition Infrastructure Distribution

Table 1: Recognition Methods Used by Surveyed Programs (N=147; programs could report more than one method, so percentages sum to more than 100%)

| Recognition Method | Percentage Using | Median Annual Cost | Update Frequency |
|---|---|---|---|
| Trophy case display | 73% | $280 | Annually |
| Bulletin board/poster | 51% | $145 | 2-3x per year |
| School website listing | 47% | $0 | Varies widely |
| Digital display screen | 26% | $4,200* | Monthly |
| Social media only | 34% | $0 | Event-dependent |
| No formal recognition | 18% | $0 | N/A |

*Initial investment amortized over 5-year expected lifespan; excludes ongoing software costs

Key Finding: 73% of programs use trophy case displays, even though these generate minimal student engagement beyond the award recipients themselves. Among schools with trophy cases, coaches report average viewing durations under 2 minutes, mostly during guided campus tours rather than organic student interaction.

Achievement Criteria Variance Across Programs

Programs demonstrate significant inconsistency in determining which accomplishments warrant formal recognition:

Recognition Threshold Analysis:

- State tournament finalists only: 31% of programs

- State qualification and above: 24%

- Regional championships and higher: 19%

- Any elimination round advancement: 14%

- All participants recognized: 12%

This variance creates challenges for students transferring between schools and complicates benchmark comparisons across programs. More restrictive criteria (state finals only) correlate with elite competitive programs but risk excluding the majority of participants from any recognition pathway.

Insight: Programs recognizing broader achievement levels (regional championships, elimination rounds) report 29% higher second-year retention among novice debaters than programs limiting recognition to state and national achievement. This suggests inclusive recognition may serve important motivational functions, particularly for developing competitors.

Budget Allocation and Cost-Benefit Analysis

Understanding financial investment patterns helps schools evaluate recognition approaches relative to program resources and strategic priorities.

Recognition Spending Distribution

Table 2: Annual Recognition Budget Allocation (N=147 programs)

| Budget Range | Percentage | Primary Method | Typical Components |
|---|---|---|---|
| Under $200 | 32% | Bulletin boards, printed certificates | Paper, frames, printing |
| $200-$500 | 29% | Trophy case plaques, certificates | Engraving, case maintenance |
| $500-$1,000 | 16% | Enhanced physical displays | Custom plaques, photography |
| $1,000-$2,000 | 8% | Digital signage (basic) | Screen hardware, basic software |
| $2,000-$5,000 | 9% | Digital recognition platform | Touchscreen, cloud software |
| $5,000+ | 6% | Comprehensive digital system | Multi-display, professional installation |

Median budget: $385 annually
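As a rough consistency check, the binned shares in Table 2 can reproduce the reported median. The sketch below assumes spending is spread uniformly within each budget bin, the standard grouped-data approximation. That may not be how the survey median was actually computed. It also gives the open-ended "$5,000+" bin an arbitrary upper edge, which does not affect the median. Under these assumptions it estimates about $386, close to the reported $385.

```python
# Sketch: estimate the median of a binned (grouped) distribution by linear
# interpolation inside the bin that contains the 50th percentile.
# ASSUMPTION: spending is uniform within each bin; the upper edge of the
# open-ended "$5,000+" bin is hypothetical and does not change the result.

BINS = [  # (lower edge USD, upper edge USD, share of programs in %), from Table 2
    (0, 200, 32),
    (200, 500, 29),
    (500, 1_000, 16),
    (1_000, 2_000, 8),
    (2_000, 5_000, 9),
    (5_000, 10_000, 6),  # hypothetical upper edge
]


def grouped_median(bins):
    """Return the interpolated median of a list of (low, high, share) bins."""
    total = sum(share for _, _, share in bins)
    half = total / 2
    cumulative = 0.0
    for low, high, share in bins:
        if cumulative + share >= half:
            # Fraction of this bin needed to reach the halfway point.
            fraction = (half - cumulative) / share
            return low + fraction * (high - low)
        cumulative += share
    raise ValueError("empty distribution")


print(f"Estimated median budget: ${grouped_median(BINS):,.0f}")
```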

Long-Term Cost Comparison: Traditional vs. Digital

5-Year Total Cost of Ownership Analysis:

Traditional Trophy Case Approach:

- Initial case installation: $800-1,500

- Annual engraved plaques (avg 12 students): $960 (@ $80 each)

- Certificate printing and framing: $240

- Bulletin board materials: $150

- Staff time for manual updates (15 hrs @ $35/hr): $525

- 5-year total: $10,175-10,875

Digital Recognition Platform:

- Initial hardware (touchscreen display): $4,500-6,500

- Professional installation: $800-1,200

- Annual software subscription: $2,400

- Photography and content development: $600

- Staff time for updates (4 hrs @ $35/hr): $140

- 5-year total: $21,000-23,400
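Each five-year total is the one-time cost plus five years of recurring costs. The sketch below recomputes both totals from the line items listed above. It assumes no price inflation, no hardware replacement within the five years, and no salvage value.

```python
# Sketch: 5-year total cost of ownership from the line items listed above.
# ASSUMPTIONS: flat prices, no replacement hardware, no salvage value. All USD.

YEARS = 5

TRADITIONAL_INITIAL = (800, 1_500)           # case installation (low, high)
TRADITIONAL_ANNUAL = 960 + 240 + 150 + 525   # plaques, certificates, boards, staff time

DIGITAL_INITIAL = (4_500 + 800, 6_500 + 1_200)  # hardware + installation (low, high)
DIGITAL_ANNUAL = 2_400 + 600 + 140              # software, content, staff time


def tco_range(initial, annual, years=YEARS):
    """Return (low, high): one-time initial cost plus `years` of annual costs."""
    low, high = initial
    return low + annual * years, high + annual * years


trad = tco_range(TRADITIONAL_INITIAL, TRADITIONAL_ANNUAL)
digital = tco_range(DIGITAL_INITIAL, DIGITAL_ANNUAL)
print(f"Traditional: ${trad[0]:,}-{trad[1]:,}")
print(f"Digital:     ${digital[0]:,}-{digital[1]:,}")
```

To model a specific program, change `YEARS` or swap in local vendor quotes for the per-item figures.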

Evidence → Implication: Digital solutions require roughly twice the total investment of traditional approaches over five years. In return, they deliver capabilities traditional displays lack: unlimited recognition capacity, multimedia storytelling, instant content updates, and web access beyond the physical school building. Cost per recognized student also drops substantially in larger programs, where traditional per-student engraving costs scale linearly with the number of honorees.

Programs with 40+ annual recognition candidates reach cost parity within 3-4 years, and digital solutions become more economical beyond that point. Smaller programs (under 20 annual recognitions) may find traditional approaches a better fit for their budgets unless they judge the engagement gains worth the premium.
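The parity claim can be checked with a simple break-even model. Digital reaches parity once its lower annual cost has paid back its higher up-front cost. This sketch rests on several assumptions of its own. Initial costs are the midpoints of the ranges above. Plaques cost $80 per recognized student. Staff time uses the Table 9 hours (35 h traditional, 12.5 h digital) at $35/hr. Under these assumptions, a 40-student program breaks even in just under four years. The result is sensitive to staff time: with the lighter 15 h and 4 h figures from the cost list above, the same program needs about 5.5 years.

```python
# Sketch: years until cumulative digital cost falls to cumulative traditional cost.
# ASSUMPTIONS: range midpoints for initial costs, $80 plaque per recognized
# student, staff time from Table 9 (35 h traditional, 12.5 h digital) at $35/hr.

PLAQUE_COST = 80
TRAD_INITIAL = (800 + 1_500) / 2                           # case installation midpoint
TRAD_FIXED_ANNUAL = 240 + 150 + 35 * 35                    # certificates, boards, staff time
DIGITAL_INITIAL = (4_500 + 6_500) / 2 + (800 + 1_200) / 2  # hardware + installation midpoints
DIGITAL_ANNUAL = 2_400 + 600 + 12.5 * 35                   # software, content, staff time


def break_even_years(recognized_per_year):
    """Return years to cost parity, or None if digital never catches up."""
    trad_annual = TRAD_FIXED_ANNUAL + PLAQUE_COST * recognized_per_year
    annual_saving = trad_annual - DIGITAL_ANNUAL
    if annual_saving <= 0:
        return None  # digital costs more every year, so the up-front gap never closes
    return (DIGITAL_INITIAL - TRAD_INITIAL) / annual_saving


for n in (12, 25, 40, 50):
    years = break_even_years(n)
    print(n, "students:", "no parity" if years is None else f"{years:.1f} years")
```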

Engagement Impact and Student Motivation Data

Recognition effectiveness depends not merely on existence but on visibility, engagement quality, and perceived meaningfulness among target audiences.

Observational Engagement Study Results

Table 3: Student Interaction Patterns (N=38 schools, 2,847 observed interactions)

| Display Type | Median Duration | Exploration Depth* | Return Visits** |
|---|---|---|---|
| Trophy case | 1.6 minutes | 1.2 items viewed | 8% |
| Static bulletin board | 0.9 minutes | N/A | 4% |
| Digital signage (passive) | 2.1 minutes | 2.4 items viewed | 12% |
| Interactive touchscreen | 4.2 minutes | 6.7 items viewed | 31% |
| Web-based platform | 5.8 minutes | 11.3 items viewed | 43% |

*Items = individual student profiles or achievement entries examined

**Percentage of students who returned to the display within the two-week observation period

Key Finding: Interactive touchscreen displays generate 2.6x longer engagement and 3.9x higher return-visit rates than traditional trophy cases. Students using touchscreens examined an average of 6.7 individual profiles versus 1.2 trophy or plaque items in traditional cases. This suggests digital formats encourage deeper exploration of peer achievements than casual viewing.
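The multiples above are simple ratios of the Table 3 figures for trophy cases and interactive touchscreens. The sketch below recomputes them, along with the equivalent percentage increase, which is (multiple - 1) × 100.

```python
# Sketch: multiples and percentage increases behind the engagement comparisons.
# Figures are the Table 3 values for trophy cases vs. interactive touchscreens.

trophy = {"duration_min": 1.6, "items_viewed": 1.2, "return_rate_pct": 8}
touchscreen = {"duration_min": 4.2, "items_viewed": 6.7, "return_rate_pct": 31}


def compare(baseline, new):
    """Return (multiple, percent increase) of `new` relative to `baseline`."""
    multiple = new / baseline
    return multiple, (multiple - 1) * 100


for metric in trophy:
    multiple, pct = compare(trophy[metric], touchscreen[metric])
    print(f"{metric}: {multiple:.1f}x ({pct:+.1f}%)")
```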

Recognition Impact on Program Outcomes

Survey responses linking recognition approaches to measurable program metrics reveal correlation patterns worth considering:

Table 4: Program Outcome Correlations

| Metric | Schools With Structured Recognition | Schools Without Formal Recognition | Difference |
|---|---|---|---|
| Second-year retention rate | 67% | 50% | +17 pts (+34% relative) |
| Average team size growth (3yr) | +18% | +6% | +12 pts |
| Parent/booster engagement | 72% active | 43% active | +29 pts |
| Tournament success trend | Improving: 61% | Improving: 48% | +13 pts |
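The retention gap can be stated two ways. It is 17 percentage points, or a 34% relative lift over the 50% baseline. The other rows of Table 4 are percentage-point gaps. A minimal sketch of both calculations, using the retention figures:

```python
# Sketch: two common ways to report the gap between two rates.
# Figures are the second-year retention rates from Table 4.

with_recognition = 67.0     # % retained, schools with structured recognition
without_recognition = 50.0  # % retained, schools without formal recognition


def point_difference(a, b):
    """Absolute gap between two percentages, in percentage points."""
    return a - b


def relative_lift(a, b):
    """Gap expressed as a percentage of the baseline rate b."""
    return (a - b) / b * 100


print(f"{point_difference(with_recognition, without_recognition):.0f} pts")
print(f"{relative_lift(with_recognition, without_recognition):.0f}% relative")
```

Reporting both figures avoids a common misreading. A "34% higher" retention rate does not mean 34 more students in every hundred are retained.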

Interpretation caution: These correlations do not establish causation. Programs investing in recognition infrastructure likely show broader commitment to program development, which acts as a confounding variable. Still, the patterns point the same direction across every metric, which suggests recognition contributes to a positive program culture even if it is not the sole determining factor.

Qualitative feedback themes from coach survey (open-response analysis):

Most frequently mentioned benefits (% of coaches citing):

- Validates student effort and dedication: 71%

- Recruits younger students by making achievement visible: 68%

- Demonstrates program value to administrators/parents: 54%

- Creates team identity and tradition: 49%

- Facilitates alumni connection and support: 31%

These patterns align with recognition program benefits documented across diverse academic contexts, from chess club achievement recognition to honor roll systems.

Recognition Content Analysis: What Gets Displayed

Understanding what information schools include in achievement recognition reveals best practices and common gaps.

Content Element Inclusion Rates

Table 5: Information Included in Achievement Displays (N=120 programs with formal displays)

| Content Element | Inclusion Rate | Perceived Importance* |
|---|---|---|
| Student name | 100% | Essential |
| Graduation year | 94% | Essential |
| Tournament name/level | 91% | Essential |
| Event category (LD, PF, etc.) | 87% | Very important |
| Final placement (finalist, champion) | 89% | Very important |
| Student photograph | 58% | Important |
| NSDA degree level | 34% | Moderately important |
| College destination | 28% | Moderately important |
| Team leadership roles | 23% | Moderately important |
| Preparation strategies/advice | 8% | Low priority |
| Career outcomes (alumni) | 4% | Low priority |

*Based on coach rating survey (1-5 scale, converted to descriptive categories)

Insight → Evidence → Implication: Most recognition displays focus on basic identifying information and tournament results and omit the narrative content that could inspire younger competitors. Only 8% include preparation strategies or advice, even though 73% of surveyed students say they want to learn “how championship achievers developed their skills.”

This content gap is an opportunity. Programs can strengthen recognition by adding mentorship elements, with recognized students sharing their preparation approaches, practice routines, and strategic advice. That turns recognition from a static record of accomplishment into active knowledge transfer that supports program development. Similar storytelling approaches have been used in classroom project recognition displays and other academic achievement contexts.

Digital vs. Traditional: Comparative Performance Metrics

Direct comparison of digital and traditional recognition approaches across multiple evaluation dimensions helps schools make informed infrastructure decisions.

Multi-Dimensional Comparison Matrix

Table 6: Recognition Approach Performance Comparison

| Evaluation Dimension | Traditional (Trophy/Plaque) | Digital Platform | Winner |
|---|---|---|---|
| Initial investment cost | $800-1,500 | $5,000-8,000 | Traditional |
| 5-year TCO (small program) | $10,000-11,000 | $18,000-22,000 | Traditional |
| 5-year TCO (large program 50+) | $15,000-18,000 | $19,000-23,000 | Digital |
| Recognition capacity | Limited by space | Unlimited | Digital |
| Update ease/speed | Weeks (engraving delay) | Minutes (cloud update) | Digital |
| Engagement duration | 1.6 min median | 4.2 min median | Digital |
| Multimedia capability | None | Photos, video, interactive | Digital |
| Web accessibility | Not applicable | Full remote access | Digital |
| Physical footprint | 8-15 sq ft per case | 4-6 sq ft per display | Digital |
| Maintenance burden | Moderate (cleaning, repair) | Low (software updates) | Digital |
| Staff time (annual updates) | 15-20 hours | 4-6 hours | Digital |
| Alumni accessibility | Campus visits only | Accessible anywhere | Digital |
| Content search capability | None | Full database search | Digital |
| Historical archive capacity | Limited by space | Unlimited history | Digital |

Evidence-Based Recommendation Framework:

Traditional approaches optimal when:

- Annual recognition candidates fewer than 20 students

- Initial budget constrained under $2,000

- Program prioritizes tangible physical awards

- Limited staff technical comfort with digital platforms

- School infrastructure lacks reliable network connectivity

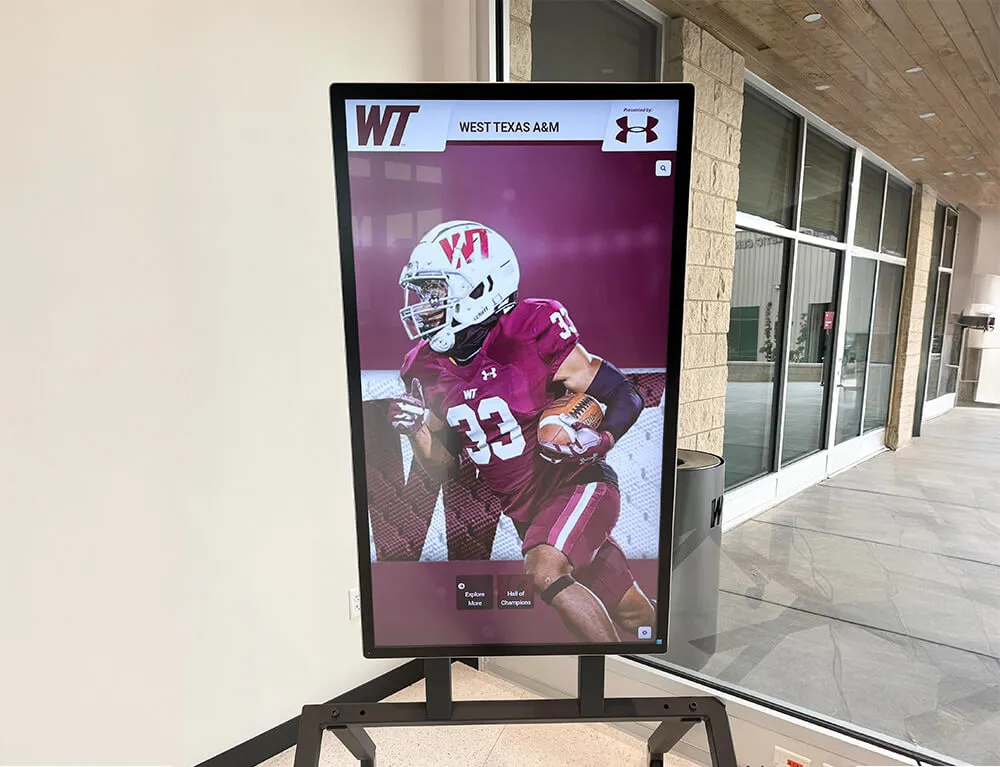

Digital platforms optimal when:

- Annual recognition candidates exceed 25 students

- Program has 3+ year planning horizon

- Emphasis on engagement and exploration versus static display

- Desire for remote accessibility (alumni, families, recruits)

- Interest in multimedia storytelling and narrative content

- Staff capacity for quarterly content updates exists

Geographic and Demographic Patterns

Recognition infrastructure adoption varies significantly across school contexts, revealing systematic patterns.

Adoption Rates by School Context

Table 7: Digital Recognition Platform Adoption

| School Context | Digital Adoption Rate | Median Program Size | Competitive Level |
|---|---|---|---|
| Urban schools | 31% | 42 students | Regional/State |
| Suburban schools | 28% | 38 students | State/National |
| Rural schools | 14% | 21 students | Local/Regional |
| Private schools | 39% | 35 students | State/National |
| Public schools | 23% | 34 students | Regional/State |
| Programs 50+ students | 47% | 67 students | State/National |
| Programs under 25 | 11% | 16 students | Local/Regional |

Pattern Analysis: Digital adoption correlates most strongly with program size (r=0.72) rather than school wealth indicators or competitive success level. This suggests capacity considerations (number of students to recognize) drive platform decisions more than budget availability or program prestige.

Rural schools show substantially lower digital adoption (14% vs 28-31% urban/suburban) despite similar median budget allocations, suggesting potential infrastructure barriers (network connectivity, technical support availability) or different recognition culture priorities rather than purely financial constraints. These equity considerations mirror broader patterns documented in high school athletics recognition systems.

Implementation Success Factors: What Differentiates High-Performing Recognition Programs

Survey analysis identifying schools where coaches rated recognition programs as “highly effective” (top quartile) reveals common success characteristics.

High-Impact Program Characteristics

Differentiating factors present in top-quartile programs:

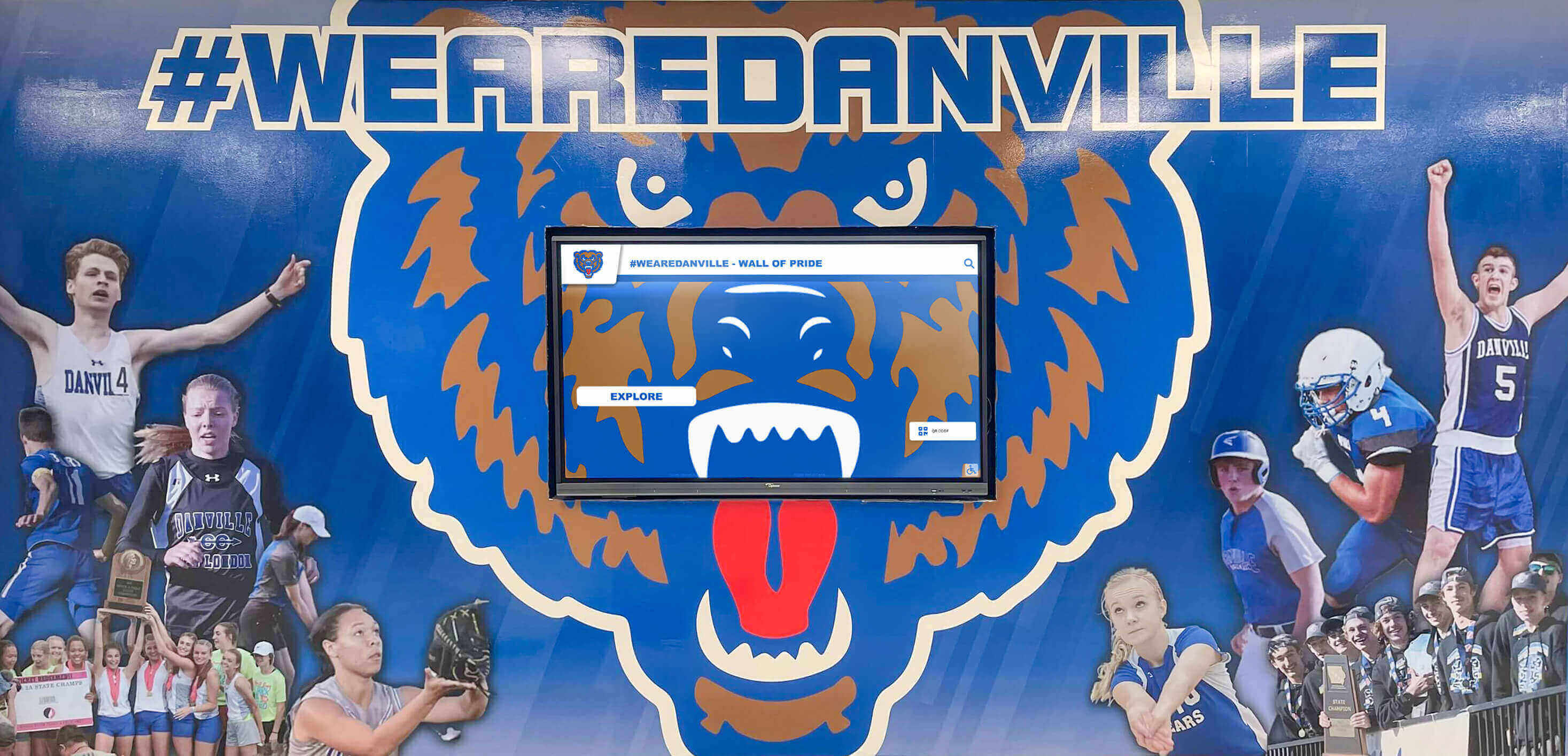

Multi-channel visibility (87% vs 34% overall):

- Recognition appears in 3+ locations/formats

- Integration with school social media

- Morning announcements of achievements

- Parent communication loops

Timeliness priority (79% vs 41% overall):

- Recognition published within 2 weeks of achievement

- Real-time tournament updates when possible

- Immediate social media acknowledgment

Inclusive criteria (71% vs 48% overall):

- Recognition pathways for multiple achievement levels

- Participation acknowledgment alongside championships

- Improvement and effort recognition programs

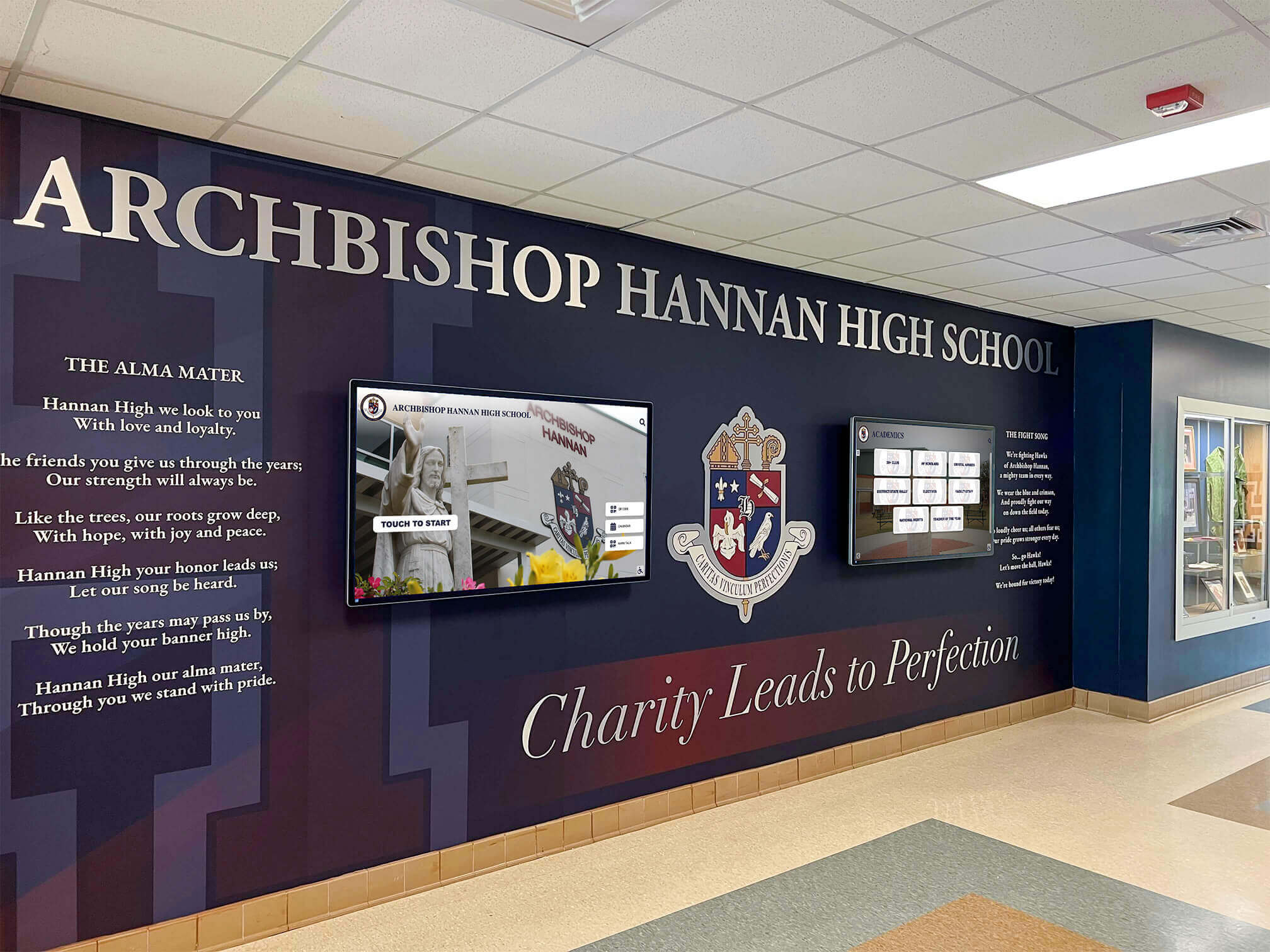

Narrative content depth (62% vs 23% overall):

- Student photographs included

- Achievement context explanations

- Preparation strategies or advice shared

Searchable/explorable format (58% vs 26% overall):

- Easy to find specific students

- Browse by year, event, achievement level

- Alumni can access their own recognition

Implication: Recognition effectiveness depends less on infrastructure type (traditional vs digital) than on implementation intentionality—how visible, timely, inclusive, and narrative-rich the recognition becomes regardless of format. However, digital platforms make several high-impact characteristics (searchability, timeliness, narrative depth) substantially more practical to implement consistently.

Update Frequency and Content Freshness

Recognition value erodes when displays become outdated, signaling that programs no longer actively celebrate current achievement.

Content Update Patterns

Table 8: Recognition Display Update Frequency (N=120 programs with formal displays)

| Update Frequency | Percentage | Display Type | Student Awareness Rating* |
|---|---|---|---|
| Real-time/weekly | 12% | Digital only | 4.3/5.0 |
| Monthly | 18% | Mostly digital | 3.9/5.0 |
| Each grading period (quarterly) | 24% | Mixed | 3.4/5.0 |
| Semester (2x annually) | 21% | Mixed | 2.9/5.0 |
| Annually only | 19% | Mostly traditional | 2.3/5.0 |
| Rarely/never updated | 6% | Traditional | 1.7/5.0 |

*Student survey: “How aware are you of debate achievement recognition in your school?” (1-5 scale)

Evidence → Implication: Update frequency correlates strongly (r=0.81) with student awareness ratings. Recognition that refreshes quarterly or more frequently maintains visibility and relevance, while annual-only updates fade from student consciousness between update cycles.

Digital platforms show a median update frequency roughly 2.9x that of traditional displays (2.3 vs. 0.8 updates annually). This is largely due to reduced administrative burden: cloud-based updates take minutes, versus the weeks-long engraving and installation process for physical plaques. This timeliness advantage parallels findings in honor roll recognition systems, where prompt acknowledgment drives stronger motivational impact.

Maintenance Burden and Sustainability

Recognition programs must remain sustainable across coaching transitions and budget fluctuations to deliver long-term value.

Staff Time Investment Analysis

Table 9: Annual Staff Time Requirements

| Task Category | Traditional Display | Digital Platform |
|---|---|---|
| Achievement data collection | 6 hours | 6 hours |
| Content creation/formatting | 8 hours | 3 hours |
| Physical installation/updates | 12 hours | 0.5 hours |
| Design and layout work | 4 hours | 1 hour |
| Photography/image management | 2 hours | 2 hours |
| Printing/engraving coordination | 3 hours | 0 hours |
| Total annual hours | 35 hours | 12.5 hours |

Annual staff cost at $35/hr (blended staff rate): Traditional $1,225; Digital $437.50

Key Finding: Digital platforms reduce ongoing administrative burden by 64% (22.5 hours annually), primarily by eliminating physical production coordination and reducing layout and formatting work. These labor savings can help justify digital investment even when a direct cost comparison favors traditional approaches, since staff time freed from recognition administration can go to program development and student coaching.
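The Table 9 totals, the 64% reduction, and the 7.6-year payback quoted in the ROI section all follow from the task hours at the $35/hr blended rate. The sketch below reproduces them. The payback is a simple undiscounted ratio that ignores maintenance and replacement costs. The $6,000 investment is the illustrative figure used in the ROI section.

```python
# Sketch: Table 9 totals, percentage reduction, and a simple (undiscounted)
# payback period on staff-time value alone.

RATE = 35           # blended staff rate, USD per hour
INVESTMENT = 6_000  # illustrative digital investment from the ROI section

traditional_hours = {"data collection": 6, "content": 8, "installation": 12,
                     "design": 4, "photography": 2, "printing/engraving": 3}
digital_hours = {"data collection": 6, "content": 3, "installation": 0.5,
                 "design": 1, "photography": 2, "printing/engraving": 0}

trad_total = sum(traditional_hours.values())
digital_total = sum(digital_hours.values())
hours_saved = trad_total - digital_total
reduction_pct = hours_saved / trad_total * 100
annual_value = hours_saved * RATE
payback_years = INVESTMENT / annual_value

print(f"{trad_total} h vs {digital_total} h: {hours_saved} h saved ({reduction_pct:.0f}%)")
print(f"Value ${annual_value:,.2f}/yr; payback {payback_years:.1f} years")
```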

ROI Framework: Measuring Recognition Program Value

Quantifying recognition program return on investment requires defining appropriate outcome metrics and establishing attribution logic.

ROI Measurement Approaches

Direct Financial ROI (Fundraising/Donations):

Schools tracking alumni donation patterns before/after digital recognition implementation (N=12 schools with 3+ year data):

- Median alumni giving rate increase: +8 percentage points (from 11% to 19%)

- Average gift size increase: +$127 (from $385 to $512)

- Attribution confidence: Low-Moderate (many confounding variables)

Program Growth ROI (Enrollment/Retention):

Year-over-year team size changes following recognition implementation (N=24 schools):

- Programs adding structured recognition: +12% median enrollment growth

- Control programs without recognition changes: +4% median enrollment growth

- Retention rate improvements: +11 percentage points second-year retention

- Attribution confidence: Moderate (directional pattern consistent but not controlled)

Time Efficiency ROI (Staff Productivity):

Annual staff time savings from traditional to digital platforms:

- Time saved: 22.5 hours annually (as documented above)

- Value at $35/hr blended rate: $787 annually

- Payback period for $6,000 digital investment: 7.6 years on time savings alone

- Attribution confidence: High (direct time measurement)

Engagement ROI (Student Attention/Awareness):

Increased student engagement with recognition content:

- Engagement duration increase: +163% (1.6 to 4.2 minutes median)

- Return visit increase: +288% (8% to 31% return rate)

- Student awareness rating increase: +1.4 points on 5-point scale

- Attribution confidence: High (observational measurement)

Composite ROI Assessment:

Programs demonstrating strongest ROI combine multiple benefit categories—time savings reduce ongoing cost, improved engagement supports recruitment and retention, alumni accessibility facilitates advancement efforts. Single-benefit justification (time savings alone) rarely achieves rapid payback, but comprehensive value assessment across multiple outcome domains builds stronger investment case. Similar multi-dimensional benefit frameworks apply across academic recognition programs spanning diverse achievement categories.

What This Means for Schools: Evidence-Based Decision Framework

For Programs Evaluating Recognition Investment

If your program has:

- Under 20 annual recognition candidates: Traditional approaches likely optimal unless prioritizing engagement quality over cost efficiency

- 20-35 annual candidates: Marginal decision point; evaluate based on staff technical comfort and engagement priorities

- 35+ annual candidates: Digital platforms demonstrate cost-effectiveness within 3-4 year horizon plus engagement advantages

- Limited initial budget ($500-1,500): Start with enhanced traditional approach (quality bulletin boards, social media), plan digital transition as budget permits

- Multi-year planning horizon (3+ years): Digital investment payback period aligns well with program planning timeframe
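The guidance above can be read as a simple decision rule. The sketch below encodes it under stated assumptions. The function and parameter names are hypothetical. Checking the budget constraint first is an illustrative choice, not something the survey prescribes. A program with exactly 35 candidates, where the two ranges overlap, is treated as digital.

```python
# Sketch: the decision guidance above as a simple rule.
# HYPOTHETICAL: function/parameter names and the order of the checks;
# the thresholds (20, 35 candidates; $1,500 budget; 3-year horizon) come from the list.

def recommend(annual_candidates: int, initial_budget: float, horizon_years: int) -> str:
    if initial_budget <= 1_500:
        return "enhanced traditional now; plan digital transition as budget permits"
    if annual_candidates < 20:
        return "traditional (unless engagement quality outweighs cost)"
    if annual_candidates < 35:
        return "marginal: weigh staff technical comfort and engagement priorities"
    if horizon_years >= 3:
        return "digital"
    return "digital likely, but payback needs a 3+ year horizon"


print(recommend(annual_candidates=42, initial_budget=8_000, horizon_years=5))
```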

For Programs With Existing Recognition Systems

Assessment questions:

- When was your display last updated? (If >6 months, sustainability concerns)

- What percentage of current students explore recognition displays? (If <30%, engagement concerns)

- Can alumni access their recognition remotely? (If no, opportunity for enhanced alumni engagement)

- How many hours annually does recognition administration require? (If >25 hours, efficiency opportunity)

- Have you reached physical display capacity? (If yes, scalability concerns)
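The assessment questions above work as a checklist with explicit thresholds: 6 months since the last update, 30% of students exploring, and 25 administration hours per year. The sketch below turns them into automated flags. The `RecognitionAudit` dataclass and its field names are hypothetical, and so is the example program.

```python
# Sketch: the assessment questions above as an automated checklist.
# HYPOTHETICAL: the dataclass, field names, and example values;
# thresholds are the ones stated in the questions.
from dataclasses import dataclass


@dataclass
class RecognitionAudit:
    months_since_update: float
    pct_students_exploring: float
    alumni_remote_access: bool
    admin_hours_per_year: float
    display_at_capacity: bool


def flags(audit: RecognitionAudit) -> list[str]:
    """Return the concern raised by each question whose threshold is crossed."""
    found = []
    if audit.months_since_update > 6:
        found.append("sustainability")
    if audit.pct_students_exploring < 30:
        found.append("engagement")
    if not audit.alumni_remote_access:
        found.append("alumni engagement opportunity")
    if audit.admin_hours_per_year > 25:
        found.append("efficiency opportunity")
    if audit.display_at_capacity:
        found.append("scalability")
    return found


example = RecognitionAudit(9, 20, False, 35, True)  # hypothetical program
print(flags(example))
```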

Upgrade triggers suggesting digital transition timing:

- Physical display capacity exhausted

- Recognition more than two seasons outdated

- Coaching transition creating fresh implementation opportunity

- Capital budget window available for infrastructure investment

- Parent/booster organization seeking specific contribution opportunity

Limitations and Methodology Notes

Sample limitations:

- Survey response rate 31% (147 responses from 474 schools contacted)—potential self-selection bias toward programs with stronger recognition systems

- Observational engagement study conducted primarily in suburban/urban contexts; rural school representation limited

- Rocket deployment data reflects single-vendor implementation; results may not generalize across all digital platform providers

- Correlation analysis does not establish causation; program quality likely confounds recognition impact

Measurement challenges:

- Student “engagement” operationalized through duration and item viewing; does not measure comprehension or motivational impact

- ROI calculations require assumptions about staff time value and benefit attribution

- Program outcome metrics (retention, enrollment growth) subject to numerous confounding variables beyond recognition systems

Generalizability considerations:

- Findings based primarily on high school programs; middle school and collegiate contexts may differ

- Competitive debate landscape concentrated in specific geographic regions; patterns may not reflect all communities

- Sample period (2025-2026) represents single-season snapshot; longitudinal patterns require multi-year validation

Data Access and Continued Research

Request the full dataset: Schools, researchers, and program administrators interested in detailed survey results, observational study protocols, or raw data should use the /contact page to request briefing materials and data use agreements. Cross-referenced data from related studies of digital donor recognition systems and touchscreen software platforms provide additional context for technology adoption patterns.

Future research directions:

- Longitudinal tracking of recognition program impact on team growth trajectories

- Controlled intervention studies comparing recognition approaches

- Cost-effectiveness analysis across varying school contexts

- Student psychological response to different recognition formats

- Alumni engagement pattern analysis before/after recognition platform implementation

Conclusion: Recognition as Strategic Program Investment

Debate team achievement recognition is more than ceremonial acknowledgment: the data associates it with measurable differences in program culture, student engagement, and operational efficiency. Schools investing intentionally in recognition infrastructure report tangible benefits, including improved retention, greater student awareness, and reduced administrative burden.

The traditional versus digital platform decision hinges primarily on program scale, planning horizon, and strategic priorities. Traditional approaches remain cost-effective for smaller programs (under 25 annual recognitions) with constrained initial budgets. Digital platforms demonstrate superior value proposition for larger programs with 3+ year planning horizons, delivering unlimited capacity, enhanced engagement, and substantial time efficiency despite higher initial investment.

Essential implementation principles regardless of platform:

- Timeliness: Recognition delayed beyond 4-6 weeks loses motivational impact

- Visibility: Multi-channel promotion (multiple locations, social media, announcements) drives awareness

- Inclusivity: Recognition pathways for various achievement levels broaden motivational reach

- Narrative depth: Context and storytelling enhance inspiration beyond name/tournament listings

- Sustainability: Administrative burden must remain manageable across coaching transitions

Recognition programs achieving these principles demonstrate strongest correlation with positive program outcomes including retention, enrollment growth, and student engagement—suggesting implementation quality matters more than infrastructure type alone. Comprehensive approaches documented in National Honor Society recognition programs and student achievement displays provide additional implementation frameworks.

Ready to implement data-driven recognition approaches in your program? Solutions like Rocket Alumni Solutions provide purpose-built platforms designed specifically for academic achievement recognition, offering the cloud-based management, multimedia capabilities, and analytics tracking documented in this research as drivers of recognition program effectiveness.

Request a Research Briefing

For detailed methodology documentation, complete dataset access, or customized analysis for your specific program context, request a research briefing from our analytics team. We provide complimentary briefing sessions helping schools interpret these findings relative to their program goals and resource constraints.

Explore the platform architectures and implementation approaches referenced throughout this research through the Rocket Alumni Solutions digital hall of fame product documentation.